Challenging Sources: A New Dataset for OMR of Diverse 19th-Century Music Theory Examples

Figure taken from the paper.

Figure taken from the paper.Abstract

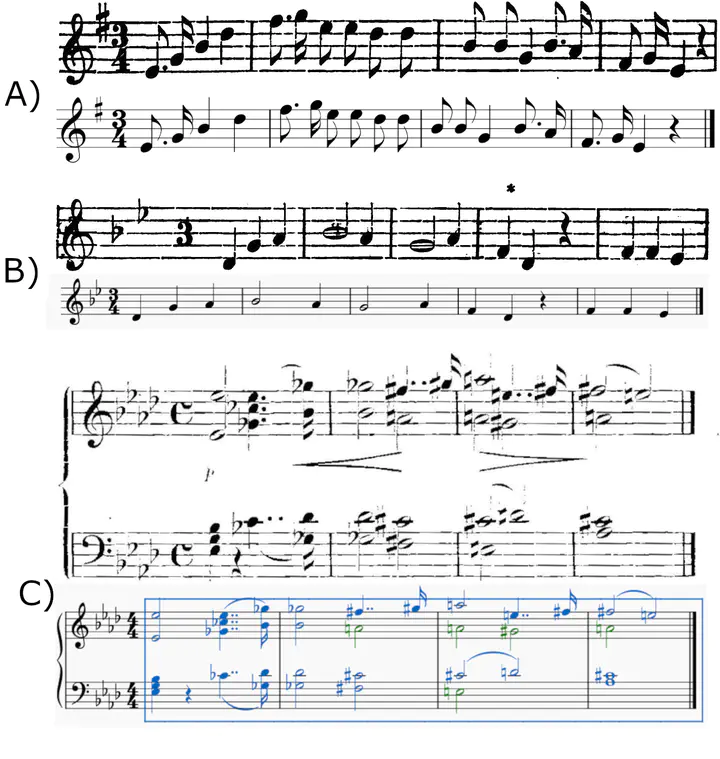

A major limitation of current Optical Music Recognition (OMR) systems is that their performance strongly depends on the variability in the input images. What for human readers seems almost trivial—e.g., reading music in a range of different font types in different contexts—can drastically reduce the output quality of OMR models. This paper introduces the 19MT-OMR corpus that can be used to test OMR models on a diverse set of sources. We illustrate this challenge by discussing several examples from this data set.

Type

Publication

In Proceedings of the 4th International Workshop on Reading Music Systems